Richard Wiggins is one of the foremost critics of smart beta ETFs, and his article for Institutional Investor on the topic went viral earlier this year. Wiggins, who works as an asset manager at an important institution, takes a highly critical view of smart beta, arguing it is based mostly on pseudoscience. We interviewed him recently and asked him to expand on his positions. Below is the transcript.

ETF Stream: You argue that smart beta is largely built on "p-hacking", which might be a new term to some of our readers. What is p-hacking and how does it affect smart beta?

Richard Wiggins: "P-hacking" is one problem; "HARKing", which we'll discuss below, is another.

P-hacking didn't exist before computers. But it's important for people to become familiar with it because it's what smart beta is based on. If you understand p-hacking, you'll understand that some of the research supporting smart beta is dodgy, and some is complete bunk.

P-hacking is where researchers play with data eligibility specifications until they get the results they want. It's where analysts run thousands of correlations and regression analyses and only report the ones that suit their agenda. It is so widespread throughout science that many published results are false positives.

P-hacking is probably easiest to understand with a non-financial example. Take Brian Wansink, a professor at Cornell's prestigious food psychology research unit. He became a social science star (he appeared on Oprah, in the New York Times and the rest) for "discovering" that weight loss is possible without really needing to diet or exercise. But it turned out his research was bunk: he p-hacked. He would encourage his underlings to tweak data until they found something publishable and, preferably, outrageous enough to go viral. This is all laid out in a great BuzzFeed article called "The Inside Story Of How An Ivy League Food Scientist Turned Shoddy Data Into Viral Studies".

Serious research is also susceptible. For example, a 2010 paper by Harvard professors Carmen Reinhart and Ken Rogoff argued that national debt lowers GDP growth. The study received a lot of media attention at the time, partly because it told right-wing politicians what they wanted to hear during Obama's first term. But the study was also bunk. A few years later, a graduate student went through their paper and found that they had cherry-picked their data.

These examples show how social scientists can munge, bin, constrain, cleanse and sub-segment data sets to tell the story they want. That's p-hacking.

The second problem is "HARKing" ("Hypothesizing After the Results are Known"). As the name suggests, it's when researchers formulate their hypotheses only once they know a study's outcome. It's easy to get a positive result because you're coming up with the theory (hypothesis) after you've seen the results. Science is meant to work the other way: you formulate a hypothesis, then do a study to see if the hypothesis holds. With HARKing, the chance of mistaking random blips for patterns increases, and the effect sizes found will be larger than the true effect sizes.
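The claim about inflated effect sizes can be illustrated with a small simulation of selective reporting, often called the "winner's curse" or significance filter. This is a sketch under hypothetical assumptions (a true effect of 0.1 standard deviations, 30 observations per study, a one-sided z-test at roughly the 2.5% level), not a model of any particular literature: many studies estimate the same small effect, and only the ones that come out positive and "significant" get written up.

```python
import math
import random
import statistics

def inflated_effect(true_effect: float = 0.1, n_obs: int = 30,
                    n_studies: int = 5000, seed: int = 7) -> tuple[float, float]:
    """Simulate many small studies of the same true effect, 'publish' only the
    positive, significant ones, and return (mean of all, mean of published)."""
    rng = random.Random(seed)
    all_estimates, published = [], []
    for _ in range(n_studies):
        sample = [rng.gauss(true_effect, 1.0) for _ in range(n_obs)]
        estimate = statistics.fmean(sample)
        std_error = statistics.stdev(sample) / math.sqrt(n_obs)
        all_estimates.append(estimate)
        # Normal-approximation test: keep only estimates > 1.96 standard errors
        if estimate / std_error > 1.96:
            published.append(estimate)
    return statistics.fmean(all_estimates), statistics.fmean(published)

avg_all, avg_published = inflated_effect()
print(f"average of all studies: {avg_all:.3f}, average of 'published' ones: {avg_published:.3f}")
```

The average across all studies sits near the true 0.1, while the average of the "published" subset is much larger, because only the lucky overestimates cleared the bar.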

We see this in finance. Jim Collins did this in his bestselling book Good to Great, where he identified 11 companies whose stocks outperformed the market and then described the plausible-sounding characteristics and traits they had in common. The book lauded companies like Circuit City and Fannie Mae, which ended up being terrible investments: Circuit City went bankrupt in 2009 and Fannie Mae hit $1 a share in 2008. This problem plagues a whole genre of books offering formulas or secret recipes for a successful business, a lasting marriage or living to be one hundred.
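The Good to Great pattern (pick past winners, then explain them) can be sketched with pure noise. The assumptions are hypothetical: 1,000 stocks that all share the same zero expected return and 20% annual volatility, a 15-year window to pick the top 11, and a fresh 15-year window to hold them. Any "outperformance" in the selection window is luck by construction.

```python
import random
import statistics

def pick_winners_then_hold(n_stocks=1000, n_years=15, n_winners=11, seed=3):
    """Pick the best past performers from pure noise, then check how they do next.
    Returns (winners' mean annual return when picked, mean return afterwards)."""
    rng = random.Random(seed)

    # Every stock has the same 0% expected return and 20% annual volatility,
    # so any outperformance in either window is luck by construction.
    def window():
        return [[rng.gauss(0.0, 0.20) for _ in range(n_years)] for _ in range(n_stocks)]

    selection, holding = window(), window()
    past = [statistics.fmean(r) for r in selection]
    winners = sorted(range(n_stocks), key=lambda i: past[i], reverse=True)[:n_winners]
    in_sample = statistics.fmean(past[i] for i in winners)
    out_of_sample = statistics.fmean(statistics.fmean(holding[i]) for i in winners)
    return in_sample, out_of_sample

in_sample, out_of_sample = pick_winners_then_hold()
print(f"winners' average annual return: {in_sample:.1%} when picked, {out_of_sample:.1%} afterwards")
```

The 11 winners look impressive in the window used to pick them and fall back to roughly zero in the next window, because there was never any trait to explain.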

We see this in the whole "evidence-based investing" movement and, of course, in smart beta.

ETF Stream: More seriously, you argue that the research methods backing up smart beta are "in the process of being discredited." What should investors know?

Richard Wiggins: Practically every modern textbook on scientific research methods teaches some version of the 'hypothetico-deductive approach', but we've been misapplying it to such an extent that the legendary Stanford epidemiologist John Ioannidis wrote a famous paper titled "Why Most Published Research Findings Are False". You're not familiar with it? Of course you aren't. You don't read scientific journals and there's no incentive for anybody to bring it to your attention.

In 2016, the American Statistical Association published an extraordinary document called "Statement on Statistical Significance and P-Values", reiterating that 'a p-value does not provide a good measure of evidence regarding a model or hypothesis'. It cannot answer the researcher's real question: "What are the odds that a hypothesis is correct?" Scientists' overreliance on p-values has led at least one journal, Basic and Applied Social Psychology, to decide it will no longer publish p-values.
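Ioannidis's paper makes this point with a simple formula. In its simplest (no-bias) case, the probability that a claimed finding is true, its positive predictive value, is PPV = (1 - β)R / ((1 - β)R + α), where R is the pre-study odds that a tested relationship is real, α is the significance threshold and 1 - β is the study's power. The sketch below only evaluates that formula; α = 0.05, 80% power and the three prior odds are illustrative assumptions.

```python
def positive_predictive_value(prior_odds: float, alpha: float = 0.05,
                              power: float = 0.80) -> float:
    """Probability that a 'significant' finding is true (Ioannidis 2005, no-bias case).

    prior_odds: R, the ratio of true to null relationships among those tested
    alpha:      significance threshold (false-positive rate per null test)
    power:      1 - beta, the chance a true relationship comes out significant
    """
    true_positives = power * prior_odds
    return true_positives / (true_positives + alpha)

for label, odds in [("1:1", 1.0), ("1:10", 0.1), ("1:100", 0.01)]:
    print(f"R = {label}: PPV = {positive_predictive_value(odds):.3f}")
```

With 80% power and α = 0.05, a "significant" result on a long-shot hypothesis (R = 1:100) is true only about 14% of the time, even though its p-value looks no different from one on a well-grounded hypothesis.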

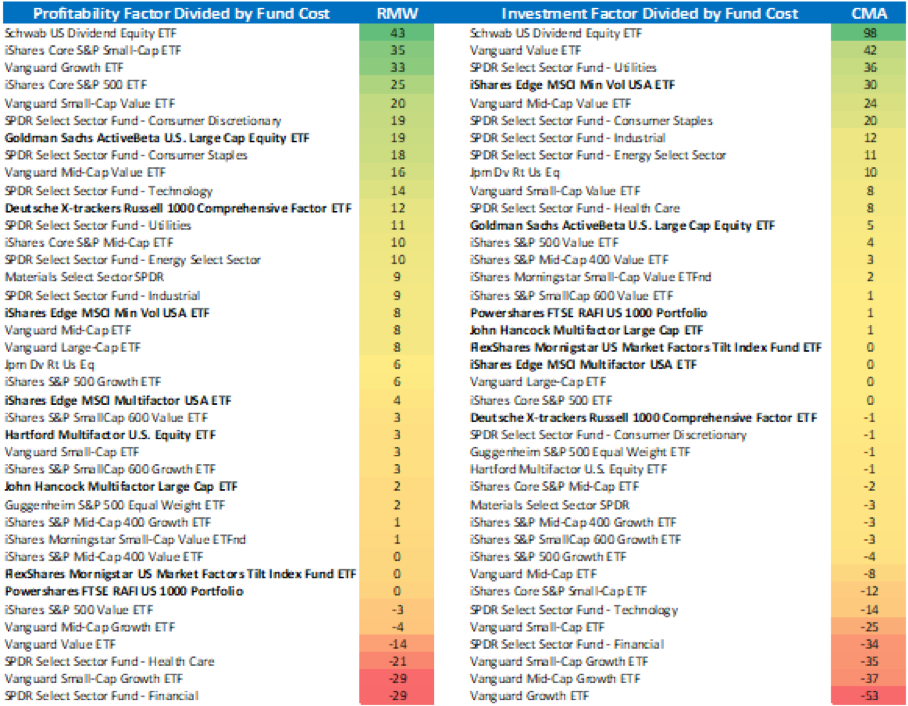

ETF Stream: You also note that different factors can cancel each other out. You call multi-factor ETFs a "bowl of mush". What evidence is there for this? Can the different factors not be timed separately?

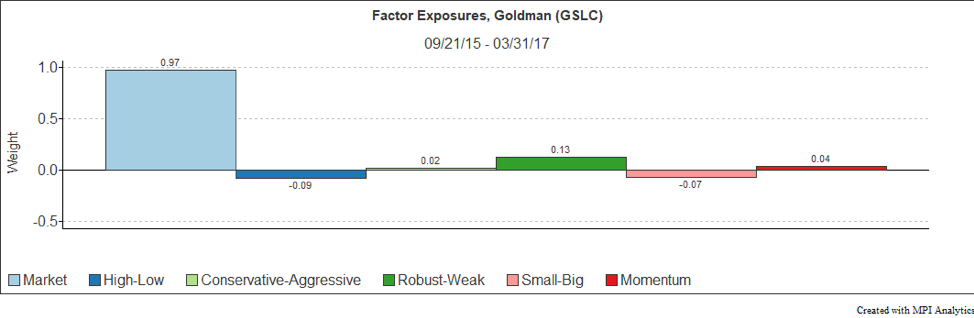

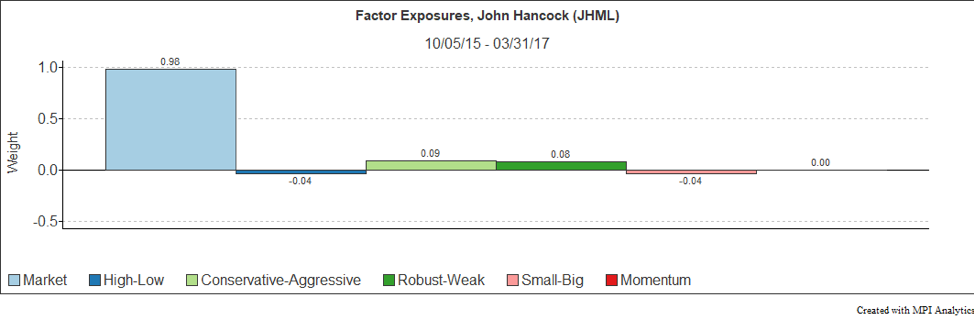

Richard Wiggins: The premise of multifactor investing is kind of like the "chronosynclastic infundibulum" in Kurt Vonnegut's novels: a place where a person can be at different points in time all at once, and different kinds of truths fit together. A lot of the "alternative weighting" within popular smart beta products is rather easily decomposed, and the resulting exposures are perhaps not as distinct as investors would expect from the marketing. Tuomo Lampinen has a great website called PortfolioVisualizer.com, and we used it to explore how easy it is to clone smart beta: replicating multi-factor products isn't as great a challenge as it seems.

There is nothing particularly novel about what they are doing: they take theories and counter-theories and mix them into one, so Goldman's "GSLC" and Morningstar's "TILT" multi-factor products deliver only incidental factor tilts. Smart beta investors are buying a suspiciously limp lottery ticket.

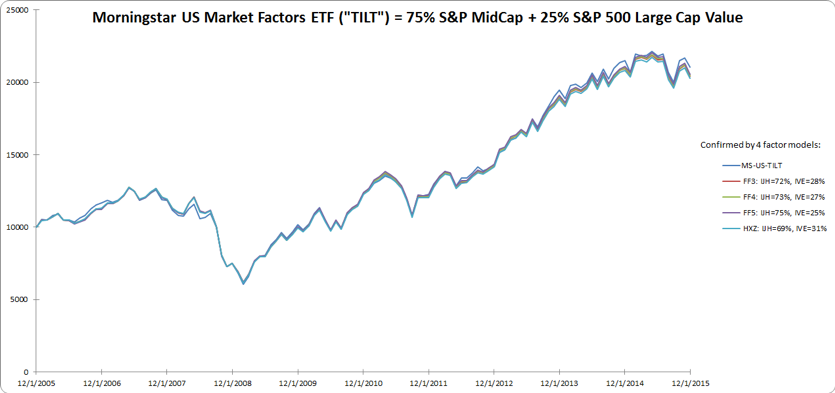

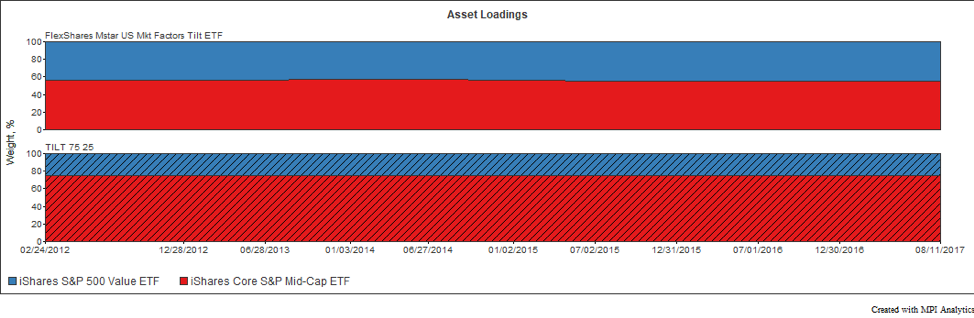

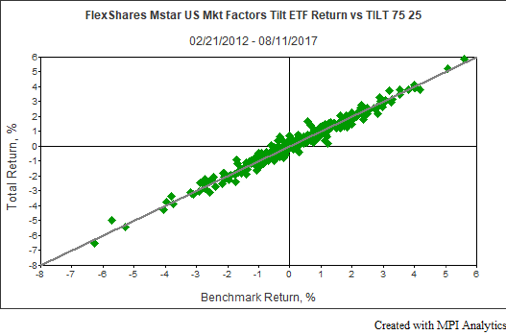

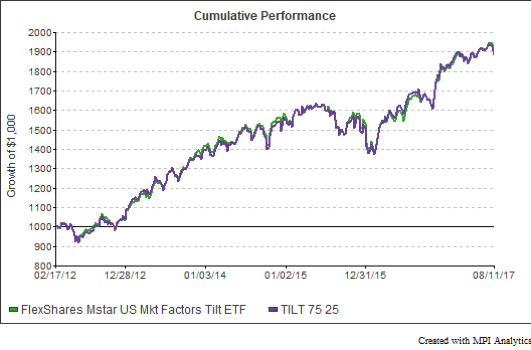

Morningstar's US Market Factor Tilt ETF (TILT) is a poster child for cloning because it has very minor size and value tilts. TILT can easily be replicated with two index funds at an expense ratio 50% lower. The growth-of-a-dollar charts overlap perfectly, and the TILT return series has an R-squared of at least 99.5% on all the factor models. Fitting the clone over 10 years, we essentially get a mix of three quarters IJH (S&P 400 mid-cap blend) and one quarter IVE (S&P 500 large-cap value).
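Cloning of this kind is a form of returns-based style analysis (the approach associated with William Sharpe): find the mix of plain index funds whose returns track the product most closely. The sketch below is a simplified two-fund version that grid-searches the weight; it is not PortfolioVisualizer's or MPI's actual method, and it runs on synthetic monthly returns rather than real TILT, IJH or IVE data. The 75/25 mix is built into the simulated "tilt fund", so the example only shows that the method recovers it.

```python
import random
import statistics

def fit_two_fund_clone(target, fund_a, fund_b, step=0.01):
    """Grid-search the weight w on fund_a (1 - w on fund_b), w in [0, 1], that
    minimises the variance of the tracking difference; return (w, r_squared)."""
    best_w, best_var = 0.0, float("inf")
    for k in range(int(round(1 / step)) + 1):
        w = k * step
        diff = [t - (w * a + (1 - w) * b) for t, a, b in zip(target, fund_a, fund_b)]
        var = statistics.pvariance(diff)
        if var < best_var:
            best_w, best_var = w, var
    # Share of the target's return variance explained by the clone
    r_squared = 1 - best_var / statistics.pvariance(target)
    return best_w, r_squared

rng = random.Random(0)
months = 120  # ten years of hypothetical monthly returns
mid_cap_blend = [rng.gauss(0.009, 0.05) for _ in range(months)]
large_cap_value = [rng.gauss(0.008, 0.04) for _ in range(months)]
# Hypothetical 'factor tilt' fund: a 75/25 mix plus a little idiosyncratic noise
tilt_fund = [0.75 * m + 0.25 * v + rng.gauss(0.0, 0.002)
             for m, v in zip(mid_cap_blend, large_cap_value)]

w, r2 = fit_two_fund_clone(tilt_fund, mid_cap_blend, large_cap_value)
print(f"clone: {w:.2f} mid-cap blend + {1 - w:.2f} large-cap value, R^2 = {r2:.3f}")
```

With more than two candidate funds, the usual approach is a constrained least-squares fit (weights non-negative and summing to one); the grid search just keeps the two-fund case dependency-free.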

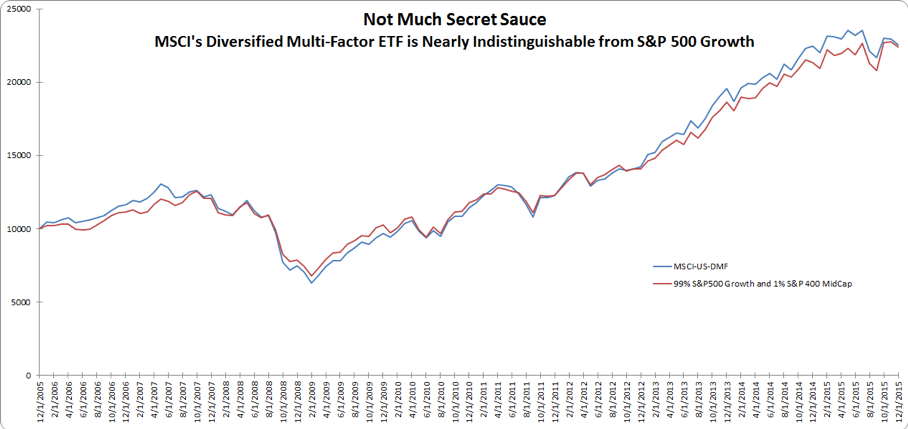

Smart beta was designed to be an improvement on capitalization-weighted indexes but, ironically, these heavily engineered multi-factor indexes can be replicated using a garden-variety mix of the generic capitalization-weighted indexes they were designed to replace. The ease of replicating these products by linearly matching their factor loadings speaks to the bogusness of the approach. The absence of factor exposure in MSCI's Edge Multifactor USA ("LRGF") product renders it essentially identical to the S&P 500 Growth index.
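Matching factor loadings linearly can be sketched as solving a small linear system: choose weights on plain index funds so that the blend's loadings on market, size and value equal the target product's. All loadings below are hypothetical, and the target was constructed from a 20/60/20 mix so the answer is known in advance. Because every fund's market loading is 1.00, the market row also forces the weights to sum to one.

```python
def solve_linear(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("factor loadings are linearly dependent")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

FACTORS = ("market", "size", "value")
# Hypothetical loadings of three plain cap-weighted index funds
funds = {
    "large_cap_blend": (1.00, -0.10, 0.00),
    "mid_cap_blend": (1.00, 0.30, 0.05),
    "large_cap_value": (1.00, -0.10, 0.35),
}
target = (1.00, 0.14, 0.10)  # hypothetical multi-factor ETF loadings

names = list(funds)
# One row per factor, one column per fund
a = [[funds[name][i] for name in names] for i in range(len(FACTORS))]
weights = dict(zip(names, solve_linear(a, list(target))))
for name, weight in weights.items():
    print(f"{name}: {weight:.2f}")
```

Nothing here stops a weight from going negative; a real clone would add a long-only constraint or fall back to a returns-based fit.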

The same is true of the John Hancock Multifactor Large Cap ETF (JHML); the product of this layer cake of factors is a return stream that looks exactly like the SPDR Russell 1000 ETF. My take is that a salmagundi of investment styles isn't such a great idea.

In addition to potential savings of hundreds of thousands of dollars in fees for large institutional investors, the use of low-cost index funds would simplify their manager oversight and due diligence. Arguing for a more ecumenical approach, companies and advisers are now busily recombining factors into different offerings and coming up with new factors. But because factors inherently cancel each other out, the natural temptation to combine all factors into a single strategy is flawed.
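The cancelling effect follows from simple arithmetic: loadings from a linear factor regression are linear in portfolio weights, so a blend's loading on each factor is the weighted average of its sleeves' loadings. The sketch assumes hypothetical sleeve loadings in which each single-factor sleeve leans against the other sleeves' factors (value against momentum, for instance); the numbers are illustrative, not estimates for any real product.

```python
# Hypothetical factor loadings for three single-factor sleeves
SLEEVES = {
    "value": {"value": 0.40, "momentum": -0.20, "quality": -0.10},
    "momentum": {"value": -0.25, "momentum": 0.45, "quality": 0.05},
    "quality": {"value": -0.10, "momentum": 0.05, "quality": 0.35},
}

def blended_loadings(sleeves, weights):
    """Loadings of a blend: the weight-averaged loadings of its sleeves
    (OLS factor loadings are linear in portfolio weights)."""
    factors = next(iter(sleeves.values())).keys()
    return {f: sum(weights[s] * sleeves[s][f] for s in sleeves) for f in factors}

equal_weights = {name: 1 / 3 for name in SLEEVES}
blend = blended_loadings(SLEEVES, equal_weights)
for factor, loading in blend.items():
    alone = SLEEVES[factor][factor]
    print(f"{factor}: sleeve alone {alone:+.2f}, in the blend {loading:+.3f}")
```

With these numbers, every factor ends up with less than a third of its single-sleeve exposure, so the cross-loadings erode the tilts beyond simple one-third dilution, and the value tilt nearly vanishes.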

If you're interested in how this works, I wrote a paper called "Cloning DFA - A Cheaper, Factor-Mimicking Portfolio" in the Journal of Indexes (November/December 2014), but the real experts are at Markov Processes International (MPI). MPI has published some excellent papers on this very topic.

ETF Stream: You call smart beta a "modern superstition". Do you think there's any role for smart beta products in portfolios?

Richard Wiggins: Right now, I'm a dissenter. Most of these new factors are coming from product literature. But the historical data, which has been used as the basis of the argument, is yielding more ambiguous results. Fama and French's original factors (value and size) haven't worked since inception. So why would I bother with the 'new' ones? We're engineering exposure to factors that might not exist.

A lot of research on these factors lets the market beta jump up and down, so there's a built-in market-timing element that gets blurred into the so-called factor return. Wes Gray, Corey Hoffstein and Felix Goltz have done some good research on the dynamic market beta within factors. The logic of combining things that back-test well to get even better back-tests (i.e. 'efficient combinations of high Sharpe ratio assets will have even higher Sharpe ratios') won't work if there was nothing there in the first place. Published evidence is unrepresentative of reality. I'm just not a fan of back-tests; they should be illegal, but they're ubiquitous. Every result is a temporary truth.

Investors need to be alert to the polyvalence and ubiquity of factor exposures. Investors already own some of these premiums and they pay a lot less for them. In fact, they probably sold some to raise funds to buy the multi-factor products. Capitalization-weighted indexes provide very low-cost exposure to smart beta factors.

ETF Stream: You also note that these problems extend beyond smart beta and into environmental and social investing. Is there a lesson here perhaps for those considering feminist or environmental ETFs?

Richard Wiggins: Yes, definitely; but it's dangerous to speak the truth. In fact, my wife proofread the article and suggested that I enroll in the Witness Protection Program. P-hacking makes it easy to get doxastically-driven studies to 'work' so you can come up with an appealing story and then go sell the hell out of it.

It's really easy to kid yourself. The latest batch of studies by McKinsey, the Clayman Institute, Credit Suisse, MSCI and others claims that greater gender diversity at senior leadership levels leads to better performance, but I'm totally not buying it. It's a pure color job, but a good one. The SPDR SSGA Gender Diversity Index won 'best ETF of 2016' and CalSTRS invested in it, but I'm sure they worked backward to mushroom-pick the 190 companies, out of the largest 1,000 available, that would be included and meet their diversity standards. Defining what it means to have more women at the top of the house was pretty arbitrary. Women are hardly represented at all in certain industries, so they had to do some janky stuff to get industry representation.

Equally wishful but unbelievable are recent studies that have found that stocks of companies that rank high overall on community, employee relations, environment, and human rights earn higher returns than other stocks. Oh, spare me. There's even a Barclays Disability Index with a "Return on Disability" model that claims that companies that 'do disability well' are also generally responsive to their customers, focused on finding great people, understand efficient processes and outperform their competition in terms of value creation. Somebody has clearly jumped the shark. C'mon, who are we kidding? Obviously, this is all nonsense. It would be nice, but it's untrue.

It's wishful. State Street just issued a research report titled "Harnessing ESG as an Alpha Source in Active Quantitative Equities." The idea is that 'environmentally efficient firms consume fewer resources and produce less waste than competitors', but I'm not sure how you'd measure that across sectors. To their credit, they note that empirical research on "E" and "S" is less than conclusive. It would be nice if it were true, like nice guys finishing first, but they're calibrating a lot of intangibles when they cue up the back-test. Truth is, after all, non-specific.

The bottom line is that a lot of garbage research has been politely allowed to pile up and fester unchallenged. Like the catch-as-catch-can studies that say wine, coffee and chocolate are good for you.

Wall Street excels at selling things. Throw away the marketing binders and pitchbooks and don't be so trustful of the media. They're not as impartial as you think. As Kathryn Saklatvala commented, "We largely report on one thing: what people want to tell us. People with something to sell and money to spend on creating press releases. We're little more than extension of industry PR efforts…eating marketing by the shedload. The voice with nothing to sell is rarely - if ever - heard."

Skepticism is healthy. Re-search means 'look again.' Always ask, 'Who is funding these papers?' and 'Why?' Recall Kierkegaard's de omnibus dubitandum est - everything must be doubted.